Forest-to-String SMT for Asian Language Translation: NAIST at WAT 2014

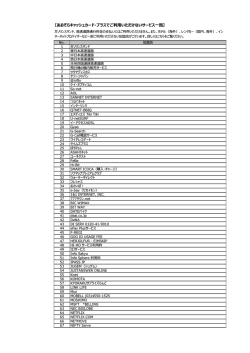

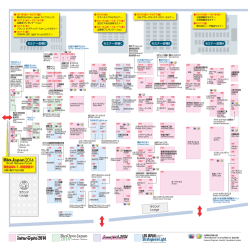

NAIST at WAT 2014

Forest-to-String SMT for Asian Language Translation: NAIST at WAT 2014
Graham Neubig
Nara Institute of Science and Technology (NAIST)
2014-10-4

Features of ASPEC
● Translation between languages with different grammatical structures
  流動 プラズマ を 正確 に 測定 する ため に 画像 を 再 構成 した 。
  an image was reconstituted in order to measure flowing plasma correctly .
● We all know: phrase-based MT is not enough
  e.g. for the accurate measurement of plasma flow image was reconstructed .

Solution?: 2-Step Translation Process
● Pre-ordering [Weblio, SAS_MT, NII, TMU, NICT]
  我々 は 科学 論文 を 翻訳 する → 我々 翻訳 する 科学 論文 → we translate scientific papers
● RBMT + Statistical Post-Editing [TOSHIBA, EIWA]
  我々 は 科学 論文 を 翻訳 する → we translate science thesis → we translate scientific papers

This is a lot of work... :(
● How do I make good Japanese-English preordering rules?!
● How do I make good Japanese-Chinese preordering rules?!
● What about error propagation?
● What if better preordering accuracy doesn't equal better translation accuracy?

Evidence

Our Solution: Tree-to-String Translation [Liu+ 06]
Example: 友達 と ご飯 を 食べ た → ate a meal with my friend
Source tree: (VP0-5 (PP0-1 (N0 友達) (P1 と)) (VP2-5 (PP2-3 (N2 ご飯) (P3 を)) (VP4-5 (V4 食べ) (SUF5 た))))
Rules (x0, x1 = first and second child subtree):
● VP0-5 → x1 with x0
● VP2-5 → x1 x0
● PP0-1 → my friend
● PP2-3 → a meal
● VP4-5 → ate

Requirements for a Tree-to-String Model
● Parallel corpus, e.g. これ は テスト です 。 / This is a test . and データ を 使用 します 。 / It uses data .
● Parser for the source sentences
● Alignments
● Rule extraction → rule scoring → optimization → tree-to-string model

Reducing our workload
X How do I make good Japanese-English preordering rules?!
X How do I make good Japanese-Chinese preordering rules?!
  What about error propagation?
X What if better preordering accuracy doesn't equal better translation accuracy?
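The rule application on the tree-to-string slide can be sketched in a few lines of Python. This is a toy, not Travatar's decoder: it assumes exactly one rule per node, keys a hypothetical `RULES` table by the span-annotated node labels read off the slide, and skips the matching of multi-level tree fragments and the scoring of competing rules that a real tree-to-string system needs.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class Node:
    """A source-side parse tree node; leaves carry a word."""
    label: str
    children: list[Node] = field(default_factory=list)
    word: str | None = None


# Hypothetical rule table for this one example, keyed by node label.
# "x0", "x1" are variables bound to the node's 1st and 2nd children;
# any other token is emitted literally. Rules with no variables cover
# the node's entire subtree.
RULES: dict[str, list[str]] = {
    "VP0-5": ["x1", "with", "x0"],
    "VP2-5": ["x1", "x0"],
    "PP0-1": ["my", "friend"],
    "PP2-3": ["a", "meal"],
    "VP4-5": ["ate"],
}


def translate(node: Node) -> list[str]:
    """Apply the rule for `node` top-down, recursing into its variables."""
    output: list[str] = []
    for token in RULES[node.label]:
        if token.startswith("x") and token[1:].isdigit():
            output.extend(translate(node.children[int(token[1:])]))
        else:
            output.append(token)
    return output


def leaf(label: str, word: str) -> Node:
    """Build a preterminal node holding a single source word."""
    return Node(label, word=word)


# 友達 と ご飯 を 食べ た, parsed as on the slide
tree = Node("VP0-5", [
    Node("PP0-1", [leaf("N0", "友達"), leaf("P1", "と")]),
    Node("VP2-5", [
        Node("PP2-3", [leaf("N2", "ご飯"), leaf("P3", "を")]),
        Node("VP4-5", [leaf("V4", "食べ"), leaf("SUF5", "た")]),
    ]),
])

print(" ".join(translate(tree)))  # ate a meal with my friend
```

The rule VP2-5 → x1 x0 is what moves the object ご飯 を after the verb, i.e. the reordering that a pre-ordering approach would handle in a separate step, here done inside translation itself.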
Forest-to-String Translation [Mi+ 08]
Packed forest over "I saw a girl with a telescope" (nodes labeled with word spans):
S 0,7; NP 0,1; PRP 0,1; VP 1,7; VBD 1,2; NP 2,7; NP 2,4; DT 2,3; NN 3,4; PP 4,7; IN 4,5; NP 5,7; DT 5,6; NN 6,7
The forest packs both readings of the prepositional phrase: PP 4,7 attached to "a girl" (via NP 2,7) or to "saw" (via VP 1,7).

Travatar Toolkit
● Forest-to-string translation toolkit
● Supports training and decoding
● Includes preprocessing scripts for parsing, etc.
● Many other features (optimization, Hiero, etc.)
Available open source! http://phontron.com/travatar

NAIST WAT System

WAT Results
First place in all tasks!
[Figure: BLEU and HUMAN evaluation scores, NAIST vs. other systems, for en-ja, ja-en, zh-ja and ja-zh; bar labels show NAIST's margins, ranging from +1.8 to +28.3]

System Elements
● Travatar! Same as [Neubig & Duh, ACL2014]
● Recurrent Neural Net Language Model
● Pre/post processing (UNK splitting, transliteration)
● Dictionaries

Recurrent Neural Network LM
Example: I can eat an apple </s>
● Vector representation → robustness
● Recurrent architecture → longer context

Pre/Post Processing
● UNK segmentation (ja-en): 試験 管立て → 試験 管 立て; 球内部 → 球 内部
● Kanji normalization (ja-zh, zh-ja): イチョウ黄叶 ↔ イチョウ黄葉; 臭気鉴定师 ↔ 臭気鑑定師
● Transliteration (ja-en): Japan インテック → Japan Intekku
● Dictionary addition (ja-en): 膿瘍 → apostema; 典型 → archetype

Conclusion

Future Work
LOSE at next year's WAT.
(Make Travatar so easy to use that others can use it to make really good MT systems for Asian languages.)
Starting soon! Training scripts to be available: http://phontron.com/project/wat2014
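The UNK segmentation step from the pre/post processing slide splits an unknown token such as 管立て into known pieces (管 立て). The slides do not say how the split is chosen, so the following is only a sketch under an assumed strategy: a dynamic program that picks the split into the fewest in-vocabulary pieces and leaves the token unchanged if no complete split exists. `segment_unk`, `segment_sentence`, and the toy vocabulary are hypothetical.

```python
from __future__ import annotations


def segment_unk(word: str, vocab: set[str]) -> list[str]:
    """Split an unknown word into the fewest in-vocabulary pieces.

    Known words, and words with no complete split, are returned unchanged.
    """
    if word in vocab:
        return [word]
    n = len(word)
    # best[i] is the fewest-piece split of word[:i], or None if none exists
    best: list[list[str] | None] = [None] * (n + 1)
    best[0] = []
    for end in range(1, n + 1):
        for start in range(end):
            prefix = best[start]
            piece = word[start:end]
            if prefix is None or piece not in vocab:
                continue
            candidate = prefix + [piece]
            current = best[end]
            if current is None or len(candidate) < len(current):
                best[end] = candidate
    result = best[n]
    return result if result is not None else [word]


def segment_sentence(tokens: list[str], vocab: set[str]) -> list[str]:
    """Apply segment_unk to every token of an already-tokenized sentence."""
    output: list[str] = []
    for token in tokens:
        output.extend(segment_unk(token, vocab))
    return output


# Hypothetical toy vocabulary standing in for the training-data vocabulary
vocab = {"試験", "管", "立て", "球", "内部"}
print(segment_sentence(["試験", "管立て"], vocab))  # ['試験', '管', '立て']
print(segment_sentence(["球内部"], vocab))  # ['球', '内部']
```

Preferring the fewest pieces keeps a word from being shredded into single characters when the vocabulary also contains them, which would give the translation model less useful units.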
